Mistral AI Models Pricing Calculator

Estimate and compare the costs for Mistral AI's newest models including Mistral Small Creative, Devstral 2 2512, Ministral 3 14B 2512, Ministral 3 8B 2512, Ministral 3 3B 2512 and more. Specify your input token count, output token count and number of API calls to get a cost estimate.


112 out of our 301 tracked models have had a price change in February.


Current Pricing (per 1M tokens)

27 models

| Provider | Model | Input ($/1M tokens) | Output ($/1M tokens) | Est. Input | Est. Output | Total |
|---|---|---|---|---|---|---|
| Mistral AI | mistral-nemo | $0.020 | $0.040 | - | - | - |
| Mistral AI | mistral-small-3.1-24b-instruct | $0.030 | $0.110 | - | - | - |
| Mistral AI | devstral-2512 | $0.050 | $0.220 | - | - | - |
| Mistral AI | mistral-small-24b-instruct-2501 | $0.050 | $0.080 | - | - | - |
| Mistral AI | mistral-small-3.2-24b-instruct | $0.060 | $0.180 | - | - | - |
| Mistral AI | mistral-small-creative | $0.100 | $0.300 | - | - | - |
| Mistral AI | ministral-3b-2512 | $0.100 | $0.100 | - | - | - |
| Mistral AI | voxtral-small-24b-2507 | $0.100 | $0.300 | - | - | - |
| Mistral AI | devstral-small | $0.100 | $0.300 | - | - | - |
| Mistral AI | mistral-7b-instruct-v0.1 | $0.110 | $0.190 | - | - | - |
| Mistral AI | ministral-8b-2512 | $0.150 | $0.150 | - | - | - |
| Mistral AI | ministral-14b-2512 | $0.200 | $0.200 | - | - | - |
| Mistral AI | mistral-saba | $0.200 | $0.600 | - | - | - |
| Mistral AI | mistral-7b-instruct | $0.200 | $0.200 | - | - | - |
| Mistral AI | mistral-7b-instruct-v0.3 | $0.200 | $0.200 | - | - | - |
| Mistral AI | mistral-7b-instruct-v0.2 | $0.200 | $0.200 | - | - | - |
| Mistral AI | codestral-2508 | $0.300 | $0.900 | - | - | - |
| Mistral AI | mistral-medium-3.1 | $0.400 | $2.000 | - | - | - |
| Mistral AI | devstral-medium | $0.400 | $2.000 | - | - | - |
| Mistral AI | mistral-medium-3 | $0.400 | $2.000 | - | - | - |
| Mistral AI | mistral-large-2512 | $0.500 | $1.500 | - | - | - |
| Mistral AI | mixtral-8x7b-instruct | $0.540 | $0.540 | - | - | - |
| Mistral AI | mistral-large-2411 | $2.000 | $6.000 | - | - | - |
| Mistral AI | mistral-large-2407 | $2.000 | $6.000 | - | - | - |
| Mistral AI | pixtral-large-2411 | $2.000 | $6.000 | - | - | - |
| Mistral AI | mixtral-8x22b-instruct | $2.000 | $6.000 | - | - | - |
| Mistral AI | mistral-large | $2.000 | $6.000 | - | - | - |

* Some models use tiered pricing based on prompt length. Displayed prices are for prompts ≤ 200k tokens.

How to Use the LLM Pricing Calculator

Step-by-Step Guide

Choose Your Measurement

Select tokens for precision, words for content planning, or characters for short-form content.

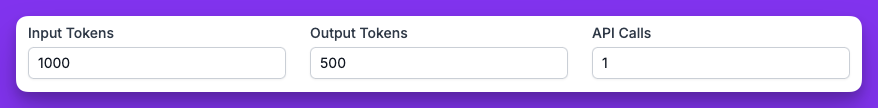

Enter Your Numbers

Input your expected prompt length, desired response size, and number of API calls:

- Prompt length: How much text you'll send to the AI (your question or instructions)

- Response size: How much text you expect the AI to generate back

- API calls: How many times you'll make this request (for total project cost)
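The arithmetic behind these three inputs can be sketched in a few lines of Python. This is a minimal illustration, not the calculator's actual implementation: the function name `estimate_cost` and its parameter names are assumptions, and the example prices are the mistral-large-2512 rates from the pricing table.

```python
def estimate_cost(input_tokens, output_tokens, api_calls,
                  input_price_per_m, output_price_per_m):
    """Return (input_cost, output_cost, total) in USD across all API calls.

    Prices are quoted per 1M tokens, so token counts are divided by 1,000,000.
    """
    input_cost = input_tokens * api_calls * input_price_per_m / 1_000_000
    output_cost = output_tokens * api_calls * output_price_per_m / 1_000_000
    return input_cost, output_cost, input_cost + output_cost


# Example: 1,000 prompt tokens and 500 response tokens per call, 10,000 calls,
# priced at $0.50 input / $1.50 output per 1M tokens (mistral-large-2512).
inp, out, total = estimate_cost(1_000, 500, 10_000, 0.50, 1.50)
print(f"input ${inp:.2f}, output ${out:.2f}, total ${total:.2f}")
# prints: input $5.00, output $7.50, total $12.50
```

Here the output side costs more than the input side even though the responses are half as long as the prompts, because output tokens are priced three times higher for this model.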

Compare Results & Analyze Breakdown

Review your pricing analysis:

- Model comparison: Compare costs of all tracked models in the pricing table

- Cost breakdown: See separate input vs output costs and total per-call expenses

- Optimization: Use the data to optimize your usage and choose the most cost-effective model
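As a rough illustration of the comparison step, the sketch below ranks a handful of models by total cost for one workload. The prices are copied from the pricing table; the helper name `rank_models`, the `PRICES` dictionary, and the example workload are assumptions made for this example.

```python
# Prices in USD per 1M tokens, copied from the pricing table: (input, output).
PRICES = {
    "mistral-nemo": (0.02, 0.04),
    "ministral-8b-2512": (0.15, 0.15),
    "mistral-medium-3.1": (0.40, 2.00),
    "mistral-large-2512": (0.50, 1.50),
}


def rank_models(prices, input_tokens, output_tokens, api_calls):
    """Return (model, total_cost_usd) pairs sorted from cheapest to most expensive."""
    totals = []
    for model, (input_price, output_price) in prices.items():
        per_call = (input_tokens * input_price + output_tokens * output_price) / 1_000_000
        totals.append((model, per_call * api_calls))
    return sorted(totals, key=lambda pair: pair[1])


# Hypothetical workload: 2,000 prompt tokens and 500 response tokens, 1,000 calls.
for model, total in rank_models(PRICES, 2_000, 500, 1_000):
    print(f"{model:<22} ${total:.4f}")
```

For this workload, mistral-large-2512 comes out slightly cheaper than mistral-medium-3.1 despite its higher input price, because its output price is lower.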

Understanding Input Types

Tokens

The most precise measurement. Tokens are the basic units AI models process; each is roughly 0.75 words or 4 characters.

Words

Standard text measurement. Perfect for writers estimating content costs. Word counts are converted at roughly 1.3 tokens per word.

Characters

Ideal for social media or short-form content. Character counts are converted at roughly 0.25 tokens per character.
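The conversion factors above can be applied as in the following sketch. The factors (1.3 tokens per word, 0.25 tokens per character) come from the text; the function name `to_tokens` and rounding to the nearest whole token are assumptions, since the page does not say how the calculator rounds.

```python
# Approximate conversion factors stated above; real tokenizers vary by
# model and by language, so treat the results as estimates only.
TOKENS_PER_UNIT = {
    "tokens": 1.0,
    "words": 1.3,
    "characters": 0.25,
}


def to_tokens(amount, unit="tokens"):
    """Convert a count in tokens, words, or characters to an estimated token count."""
    if unit not in TOKENS_PER_UNIT:
        raise ValueError(f"unknown unit: {unit!r}")
    # Rounding to the nearest whole token is an assumption of this sketch.
    return round(amount * TOKENS_PER_UNIT[unit])


print(to_tokens(1_000, "words"))       # 1300
print(to_tokens(400, "characters"))    # 100
print(to_tokens(512, "tokens"))        # 512
```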

Calculator Components

Input

Text you send to the AI model API (your prompt)

Output

Generated response from the model

API Calls

Number of requests you'll make to calculate total project cost

Pro Tips

Pricing data is updated daily from OpenRouter to ensure accuracy

Multiply the per-call cost by your expected usage volume for total project estimates

Use the comparison table to find the most cost-effective model for your specific use case

Consider both input and output costs: output tokens often cost several times more than input tokens, and the ratio varies by model
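To see why the input/output pricing ratio matters, the sketch below compares two models from the pricing table on a prompt-heavy workload and a response-heavy one. The prices are taken from the table; the function names and the two example workloads are assumptions chosen for illustration.

```python
def per_call_cost(input_tokens, output_tokens, input_price, output_price):
    """Cost in USD of a single call, with prices quoted per 1M tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def cheaper_model(input_tokens, output_tokens):
    """Return whichever of the two example models is cheaper for this call shape."""
    medium = per_call_cost(input_tokens, output_tokens, 0.40, 2.00)  # mistral-medium-3.1
    large = per_call_cost(input_tokens, output_tokens, 0.50, 1.50)   # mistral-large-2512
    return "mistral-medium-3.1" if medium < large else "mistral-large-2512"


# Prompt-heavy call (long context, short answer): the lower input price wins.
print(cheaper_model(10_000, 500))   # mistral-medium-3.1

# Response-heavy call (short prompt, long answer): the lower output price wins.
print(cheaper_model(1_000, 1_000))  # mistral-large-2512
```

With these two price pairs the break-even point is where output tokens equal 20% of input tokens: solving 0.40·I + 2.00·O = 0.50·I + 1.50·O gives O = 0.2·I. Below that ratio mistral-medium-3.1 is cheaper; above it, mistral-large-2512 is.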

Frequently Asked Questions

Everything you need to know about AI pricing and tokens