LLM Pricing MCP Server for Claude Code and Cursor

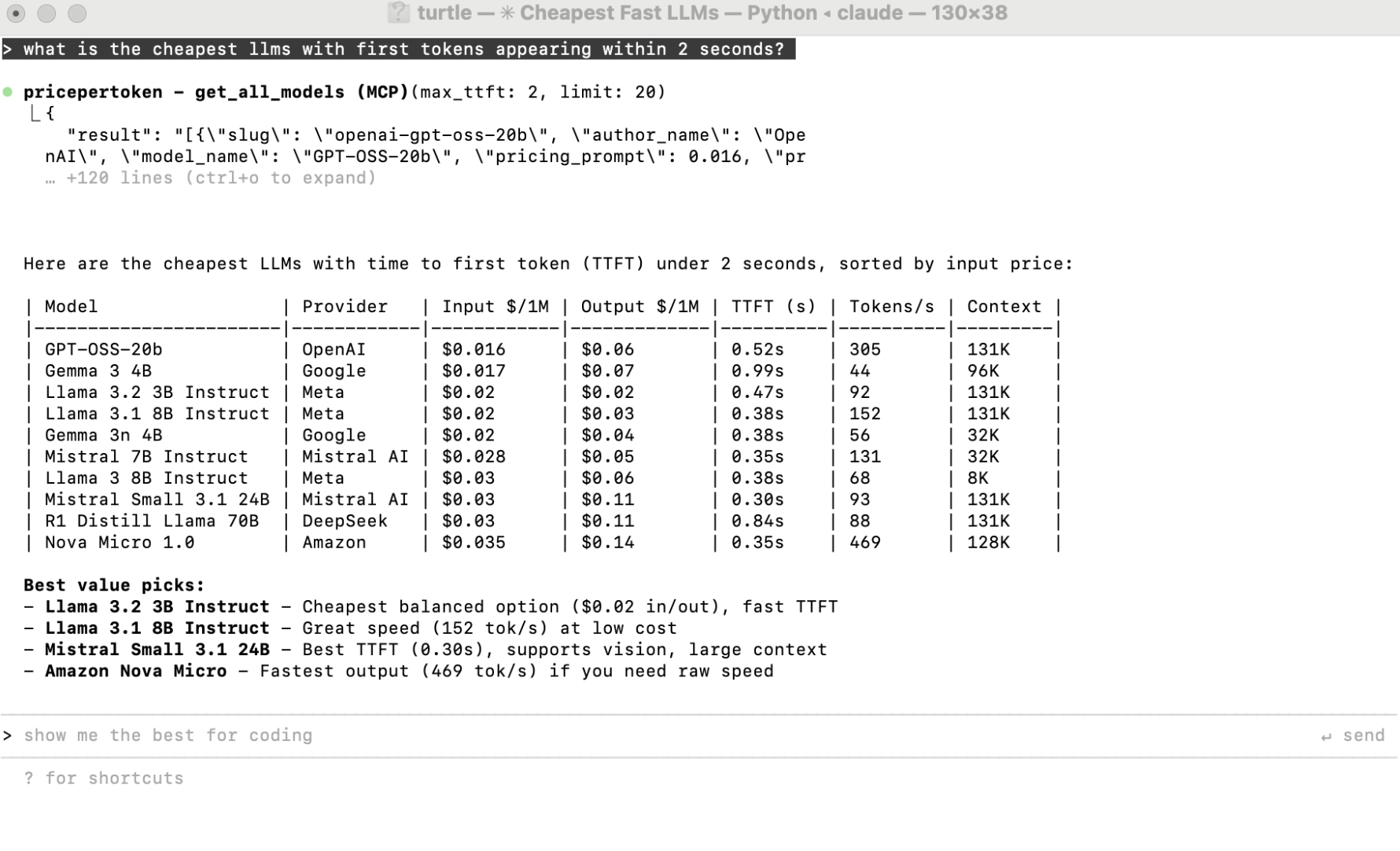

Access real-time LLM pricing, benchmark, and speed data directly in Claude Code, Cursor, Windsurf, and other MCP-enabled AI assistants.

How the MCP Server Works

Our MCP server gives your coding assistant access to all of our data, including pricing, benchmarks, latency, and endpoint availability, so you can make informed model decisions without leaving your workflow.

Available Tools

Query pricing, compare models, and access benchmark data with these tools:

| Tool | Description |
|---|---|
| get_all_models | Get pricing for all models, with filtering by author, context length, TTFT, speed, or capabilities |
| get_model | Get detailed info for a specific model, including all pricing tiers, benchmarks, and latency |
| compare_models | Side-by-side comparison of multiple models by slug |
| get_benchmarks | Get models ranked by a specific benchmark (coding, math, intelligence, etc.) |
| get_providers | List all providers with model counts and price ranges |
| search_models | Search models by name, author, or description |
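
MCP clients invoke tools like these with JSON-RPC 2.0 `tools/call` requests, and your assistant builds them for you. As a rough illustration of what goes over the wire, here is a minimal Python sketch that constructs such requests for two of the tools listed above. The tool names come from the table; the argument names (`slugs`, `benchmark`) and the slug values are hypothetical placeholders, since the actual input schema is whatever the server reports via `tools/list`.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request body for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Argument names and slug values below are assumptions for illustration only.
compare = build_tool_call("compare_models", {"slugs": ["model-a", "model-b"]})
ranking = build_tool_call("get_benchmarks", {"benchmark": "coding"})

print(json.dumps(compare, indent=2))
```

In normal use you never write these requests by hand; the sketch only shows the shape of the messages behind a prompt such as "compare these two models".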

Setup

Choose your AI assistant below for setup instructions. No API key required.
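
The file-based setups below (Cursor, Claude Desktop, Windsurf) all place the server entry under an `mcpServers` key. If your config file already lists other servers, the entry should be merged in rather than pasted over the file. The following is a minimal Python sketch of that merge, not an official installer: `add_server` is a hypothetical helper, and the demo writes to a temporary directory with a made-up `other` server so it never touches your real config.

```python
import json
import tempfile
from pathlib import Path

SERVER_NAME = "pricepertoken"
SERVER_URL = "https://api.pricepertoken.com/mcp/mcp"


def add_server(config_path: Path, entry: dict) -> dict:
    """Merge one server entry into an MCP config file, keeping existing servers."""
    config = {}
    if config_path.exists():
        text = config_path.read_text(encoding="utf-8")
        if text.strip():
            config = json.loads(text)
    # Create the "mcpServers" object if it is missing, then add or update our entry.
    config.setdefault("mcpServers", {})[SERVER_NAME] = entry
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


# Demo against a throwaway file that already contains another (made-up) server.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / "mcp.json"
    existing = {"mcpServers": {"other": {"url": "https://example.com/mcp"}}}
    demo_path.write_text(json.dumps(existing), encoding="utf-8")
    merged = add_server(demo_path, {"url": SERVER_URL})
    print(json.dumps(merged, indent=2))
```

For Cursor, the equivalent real call would be `add_server(Path.home() / ".cursor" / "mcp.json", {"url": SERVER_URL})`. Windsurf uses the key `serverUrl` instead of `url`, as shown in its section.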

Claude Code

Run this command in your terminal:

    claude mcp add pricepertoken --transport http --url https://api.pricepertoken.com/mcp/mcp

Cursor

Add this to your ~/.cursor/mcp.json:

    {
      "mcpServers": {
        "pricepertoken": {
          "url": "https://api.pricepertoken.com/mcp/mcp"
        }
      }
    }

Claude Desktop

Add this to your claude_desktop_config.json:

    {
      "mcpServers": {
        "pricepertoken": {
          "command": "npx",
          "args": [
            "mcp-remote",
            "https://api.pricepertoken.com/mcp/mcp"
          ]
        }
      }
    }

Windsurf

Add this to your ~/.codeium/windsurf/mcp_config.json:

    {
      "mcpServers": {
        "pricepertoken": {
          "serverUrl": "https://api.pricepertoken.com/mcp/mcp"
        }
      }
    }

Example Prompts

Once connected, try asking your AI assistant:

"What is the cheapest model with 100k+ context?"

"Compare GPT-4o and Claude 3.5 Sonnet pricing"

"Show me the top 10 models for coding benchmarks"

"Find the fastest models with TTFT under 2 seconds"

"What Anthropic models support vision?"

Frequently Asked Questions

Do I need an API key?

No, the Price Per Token MCP is free to use and doesn't require authentication.

How often is pricing data updated?

Our pricing database is updated regularly as providers announce changes. You always get the latest data.

What models are included?

We track pricing for 100+ models from OpenAI, Anthropic, Google, Meta, Mistral, and many more providers. View all on our LLM pricing page.

Can I compare model benchmarks?

Yes, use the get_benchmarks tool to rank models by coding, math, or intelligence scores. See our LLM rankings for more.

Is there a rate limit?

There's no strict rate limit, but please use the server responsibly. If you need high-volume access, contact us.
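
If you script calls against the server yourself, one way to stay on the responsible side is to space requests out on the client. The following is a minimal Python sketch of a minimum-interval throttle. `MinIntervalThrottle` is a hypothetical helper, and the one-second interval is a placeholder, not a published limit.

```python
import time


class MinIntervalThrottle:
    """Ensure at least `min_interval_s` seconds pass between consecutive calls."""

    def __init__(self, min_interval_s: float, clock=time.monotonic, sleep=time.sleep):
        self._min_interval = min_interval_s
        self._clock = clock   # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None     # time at which the previous call was allowed to proceed

    def wait(self) -> float:
        """Block until the next call is allowed; return the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self._min_interval - (now - self._last))
        if delay > 0:
            self._sleep(delay)
        self._last = now + delay
        return delay


# Assumed interval of one second between requests (placeholder value).
throttle = MinIntervalThrottle(1.0)
throttle.wait()  # first call proceeds immediately; later calls pause if needed
```

Call `throttle.wait()` before each request in a batch job. Interactive use through an assistant rarely needs this.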

Ready to get started?

Set up the MCP in under a minute and start making data-driven model decisions.

Built by @aellman

© 2026 68 Ventures, LLC. All rights reserved.